Tag: RAG

-

MIT’s SASA Method: Training LLMs to Self-Detoxify Their Language Output

MIT researchers have developed SASA, a method that lets large language models (LLMs) detoxify their own outputs without retraining. It learns a boundary between toxic and non-toxic subspaces in the model's internal representations and uses it to steer generation toward appropriate content while preserving natural fluency, much as people develop internal filters for what is appropriate to say.
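The teaser describes the mechanism only at a high level. As a rough illustration, and not MIT's implementation, the sketch below assumes a linear boundary w·x + b = 0 has already been fit in some embedding space. It then nudges next-token probabilities toward candidates whose embeddings fall on the non-toxic side. The names `subspace_guided_probs`, `w`, `b`, `beta`, and the candidate embeddings are hypothetical inputs chosen for the example.

```python
import numpy as np


def softmax(z: np.ndarray) -> np.ndarray:
    # Numerically stable softmax.
    z = z - np.max(z)
    e = np.exp(z)
    return e / e.sum()


def subspace_guided_probs(
    logits: np.ndarray,
    candidate_embeddings: np.ndarray,
    w: np.ndarray,
    b: float,
    beta: float = 5.0,
) -> np.ndarray:
    """Reweight next-token probabilities using a linear toxic/non-toxic boundary.

    Assumptions (hypothetical, for illustration only):
      logits:               (V,) base model logits for V candidate tokens
      candidate_embeddings: (V, d) embedding of the context extended by each candidate
      w, b:                 hyperplane where w @ x + b > 0 is the non-toxic side
      beta:                 steering strength; beta = 0 recovers the base distribution
    """
    # Signed Euclidean distance of each candidate embedding to the boundary.
    margins = (candidate_embeddings @ w + b) / np.linalg.norm(w)
    # Adding beta * margin to the logits multiplies each probability by exp(beta * margin).
    return softmax(logits + beta * margins)


# Two equally likely candidates: one embeds on the non-toxic side, one on the toxic side.
logits = np.zeros(2)
embeddings = np.array([[1.0], [-1.0]])
w = np.array([1.0])

steered = subspace_guided_probs(logits, embeddings, w, b=0.0)
baseline = subspace_guided_probs(logits, embeddings, w, b=0.0, beta=0.0)
print(steered, baseline)
```

Working in logit space keeps the result a valid probability distribution, and the single `beta` knob trades steering strength against staying close to the model's original, fluent distribution.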

-

Enhancing Netflix Recommendations with FM-Intent: Predicting User Session Intent

Netflix has developed FM-Intent, a recommendation model that predicts a user's intent during a viewing session. By inferring whether a user wants to discover new content, continue watching a show, or explore a specific genre, FM-Intent produces recommendations that are 7.4% more accurate than those of previous systems, making the streaming experience more personalized.

-

Mastering RAG: A Guide to Evaluation and Optimization

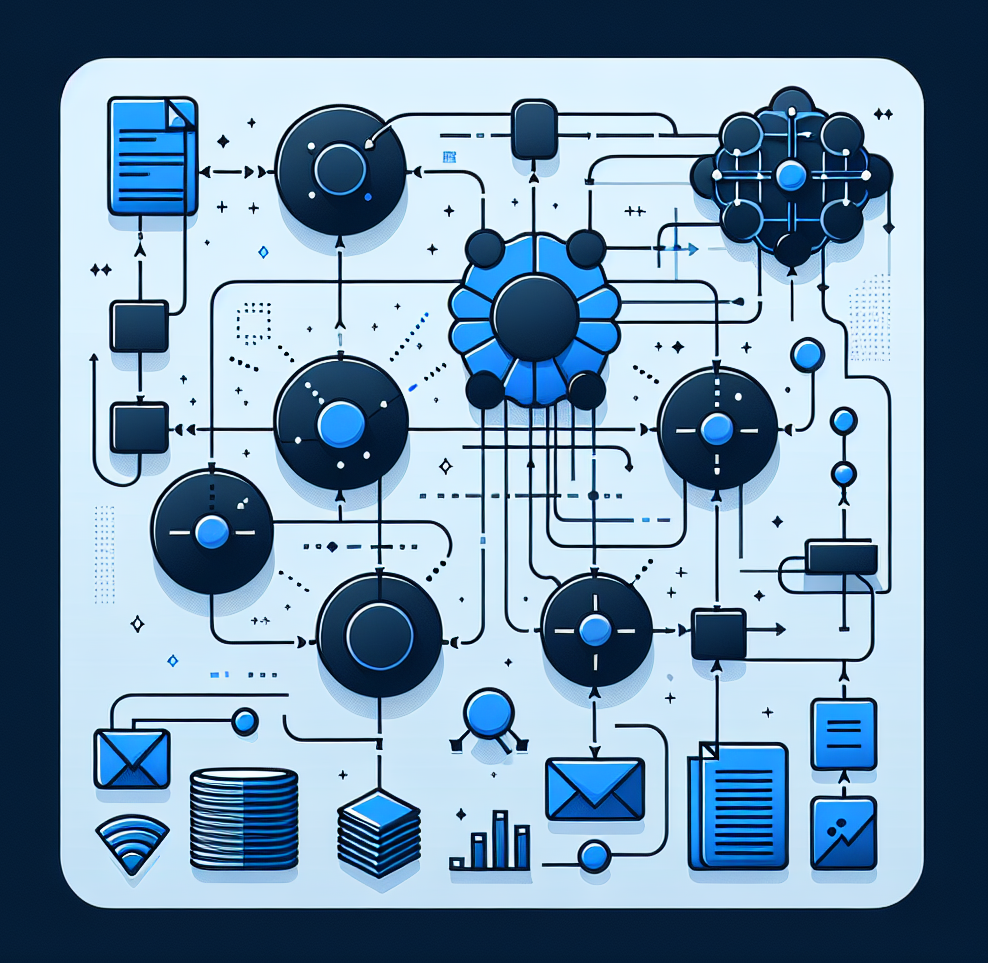

Discover strategies for evaluating and optimizing Retrieval-Augmented Generation (RAG) systems. Learn about testing frameworks, evaluation metrics, and how to balance automated testing with human evaluation to get the best performance.
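The teaser does not say which metrics the guide covers. As a hedged illustration of the retrieval side of RAG evaluation, the sketch below computes two standard ranking metrics, recall@k and mean reciprocal rank (MRR), over a small hypothetical set of queries. The function names and data are assumptions and are not taken from the guide.

```python
from typing import List, Sequence, Set, Tuple


def recall_at_k(retrieved: Sequence[str], relevant: Set[str], k: int) -> float:
    """Fraction of relevant documents that appear in the top-k retrieved results."""
    if not relevant:
        return 0.0
    return len(set(retrieved[:k]) & relevant) / len(relevant)


def reciprocal_rank(retrieved: Sequence[str], relevant: Set[str]) -> float:
    """1 / rank of the first relevant document, or 0.0 if none is retrieved."""
    for rank, doc_id in enumerate(retrieved, start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0


def evaluate(runs: List[Tuple[Sequence[str], Set[str]]], k: int) -> Tuple[float, float]:
    """Average recall@k and mean reciprocal rank (MRR) over (retrieved, relevant) pairs."""
    n = len(runs)
    mean_recall = sum(recall_at_k(ret, rel, k) for ret, rel in runs) / n
    mrr = sum(reciprocal_rank(ret, rel) for ret, rel in runs) / n
    return mean_recall, mrr


# Hypothetical evaluation set: ranked document IDs per query and the IDs judged relevant.
runs = [
    (["d3", "d1", "d7"], {"d1", "d2"}),
    (["d5", "d2"], {"d2"}),
]
mean_recall, mrr = evaluate(runs, k=2)
print(mean_recall, mrr)  # 0.75 0.5
```

Metrics like these score retrieval only; the quality of the generated answers needs separate checks, whether automated or human.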