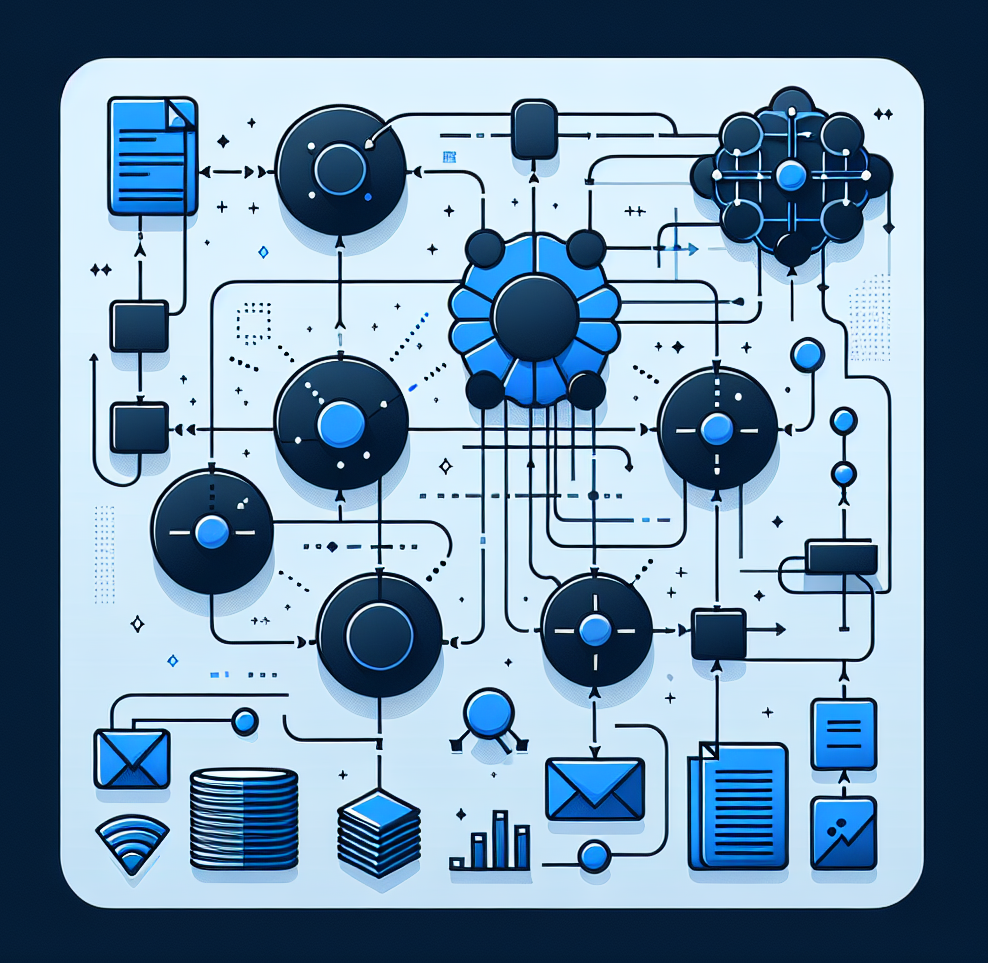

Understanding RAG and Its Importance

Retrieval-augmented generation (RAG) enhances large language models by retrieving real-time and specialized data at query time and passing it to the model as context. While the concept seems straightforward, a reliable implementation depends on careful evaluation and testing.

Creating a Robust Testing Framework

A successful RAG implementation starts with a comprehensive testing framework. Here are the key components:

- Develop high-quality test datasets covering diverse use cases

- Create golden reference datasets for output evaluation

- Implement systematic variable testing

- Choose appropriate evaluation metrics
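
The components above can be sketched as a small harness. This is a minimal illustration, not a prescribed design: `GoldenCase`, `run_suite`, `exact_match`, and `stub_pipeline` are hypothetical names, the questions and answers are made-up placeholders, and exact match stands in for whatever metric you actually choose.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class GoldenCase:
    """One entry in a golden reference dataset (hypothetical schema)."""
    question: str
    golden_answer: str
    category: str  # use-case label, so coverage per use case is visible


def exact_match(answer: str, golden: str) -> float:
    """Placeholder metric: 1.0 if the answers match ignoring case and padding."""
    return 1.0 if answer.strip().lower() == golden.strip().lower() else 0.0


def run_suite(
    cases: List[GoldenCase],
    answer_fn: Callable[[str], str],
    score_fn: Callable[[str, str], float],
    threshold: float = 0.5,
) -> Dict[str, float]:
    """Run every case through answer_fn and return the pass rate per category."""
    totals: Dict[str, int] = {}
    passes: Dict[str, int] = {}
    for case in cases:
        score = score_fn(answer_fn(case.question), case.golden_answer)
        totals[case.category] = totals.get(case.category, 0) + 1
        if score >= threshold:
            passes[case.category] = passes.get(case.category, 0) + 1
    return {cat: passes.get(cat, 0) / totals[cat] for cat in totals}


# Made-up golden data covering two use cases.
GOLDEN = [
    GoldenCase("What is the refund window?", "30 days", "policy"),
    GoldenCase("Which plan includes SSO?", "Enterprise", "pricing"),
    GoldenCase("How long is the trial?", "14 days", "pricing"),
]


def stub_pipeline(question: str) -> str:
    """Stand-in for a real RAG pipeline; returns canned answers."""
    canned = {
        "What is the refund window?": "30 days",
        "Which plan includes SSO?": "enterprise",
    }
    return canned.get(question, "I don't know")


report = run_suite(GOLDEN, stub_pipeline, exact_match)
print(report)
```

Reporting pass rates per category rather than one global number makes it easier to see which use cases the dataset covers and where the pipeline is weak.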

Evaluation Tools and Metrics

Two frameworks commonly used for RAG evaluation:

- Ragas: An open-source tool measuring factual accuracy and relevance

- Vertex AI Gen AI evaluation service: Supports custom metrics and comprehensive evaluation
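
To make "accuracy" and "relevance" concrete, here is a rough sketch of two simple hand-rolled metrics. These are not the Ragas or Vertex AI implementations or APIs; `token_f1` and `recall_at_k` are illustrative names, and word overlap is only a crude proxy for factual accuracy.

```python
from collections import Counter
from typing import List


def token_f1(answer: str, reference: str) -> float:
    """Word-overlap F1 between a generated answer and a reference answer."""
    answer_tokens = answer.lower().split()
    reference_tokens = reference.lower().split()
    if not answer_tokens or not reference_tokens:
        return 0.0
    # Multiset intersection counts each shared word at most as often as it
    # appears in both texts.
    overlap = sum((Counter(answer_tokens) & Counter(reference_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(answer_tokens)
    recall = overlap / len(reference_tokens)
    return 2 * precision * recall / (precision + recall)


def recall_at_k(retrieved_ids: List[str], relevant_ids: List[str], k: int) -> float:
    """Fraction of the relevant documents that appear in the top-k retrieved."""
    relevant = set(relevant_ids)
    if not relevant:
        return 0.0
    return len(set(retrieved_ids[:k]) & relevant) / len(relevant)
```

The first metric scores the generated answer and the second scores the retrieval step, which helps separate generation problems from retrieval problems when a test case fails.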

Root Cause Analysis

Tracing poor results to their cause requires systematically testing each of these variables:

- Number of retrieved document neighbors (top-k)

- Embedding model selection

- Chunking strategies and sizes

- Document metadata enrichment
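
A sweep over two of these variables, chunk size and number of neighbors, might look like the sketch below. Everything here is an assumption made for illustration: the word-overlap scorer is a toy stand-in for an embedding model, the corpus and test cases are made up, and `chunk_words`, `retrieve`, `hit_rate`, and `sweep` are hypothetical helper names.

```python
from itertools import product
from typing import Dict, List, Tuple


def chunk_words(text: str, size: int, overlap: int = 0) -> List[str]:
    """Split text into chunks of `size` words; consecutive chunks share `overlap` words."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last chunk already reached the end of the text
    return chunks


def overlap_score(query: str, chunk: str) -> int:
    """Toy relevance score: number of distinct query words present in the chunk."""
    return len(set(query.lower().split()) & set(chunk.lower().split()))


def retrieve(query: str, chunks: List[str], top_k: int) -> List[str]:
    """Return the top_k chunks by toy score (ties keep original order)."""
    ranked = sorted(chunks, key=lambda c: overlap_score(query, c), reverse=True)
    return ranked[:top_k]


def hit_rate(cases: List[Tuple[str, str]], chunks: List[str], top_k: int) -> float:
    """Fraction of cases whose expected phrase appears in a retrieved chunk."""
    hits = sum(
        any(expected.lower() in c.lower() for c in retrieve(query, chunks, top_k))
        for query, expected in cases
    )
    return hits / len(cases)


def sweep(
    corpus: List[str],
    cases: List[Tuple[str, str]],
    sizes: List[int],
    top_ks: List[int],
    overlap: int = 0,
) -> Dict[Tuple[int, int], float]:
    """Evaluate every (chunk size, top_k) combination on the same test cases."""
    results = {}
    for size, k in product(sizes, top_ks):
        chunks = [c for doc in corpus for c in chunk_words(doc, size, overlap)]
        results[(size, k)] = hit_rate(cases, chunks, k)
    return results


# Made-up corpus and (question, expected phrase) pairs.
CORPUS = [
    "Refunds are accepted within 30 days of purchase.",
    "The Enterprise plan includes single sign-on and audit logs.",
]
CASES = [
    ("refunds accepted within how many days", "30 days"),
    ("which plan includes single sign-on", "Enterprise plan"),
]

results = sweep(CORPUS, CASES, sizes=[4, 16], top_ks=[1, 2])
for (size, k), rate in sorted(results.items()):
    print(f"chunk_size={size} top_k={k} hit_rate={rate:.2f}")
```

On this toy data, 4-word chunks with a single neighbor miss the refund question, because the words that match the query and the phrase containing the answer land in different chunks. Keeping the test cases fixed while only the configuration changes is what lets a difference in score be attributed to the variable under test.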

Human Evaluation Component

While automated metrics are valuable, human evaluation provides crucial insights into:

- Response tone and clarity

- User experience and satisfaction

- Real-world applicability

Successful RAG implementation requires a balance of automated testing and human evaluation, ensuring both technical accuracy and practical usability.

To learn more about RAG optimization, see Google Cloud’s official blog.